Google’s AI-driven account purge across YouTube, Gmail, AdSense, and Google Ads has reached unprecedented scale in 2025. Automated enforcement systems now serve as judge, jury, and executioner, terminating millions of accounts without meaningful human review or appeal pathways and fundamentally transforming how content creators, businesses, and everyday users interact with the world’s largest digital ecosystem. This systematic elimination reflects Google’s deployment of advanced artificial intelligence detection systems that identify policy violations, spam patterns, and automated content at industrial scale, but with alarming collateral damage: legitimate creators lose decade-long channels generating six-figure revenues, families lose irreplaceable email archives spanning years, and businesses see advertising accounts frozen mid-campaign without explanation or recourse.

This comprehensive guide examines the technical architecture behind Google’s AI enforcement, answers the specific questions users ask when facing account terminations, provides survival strategies across all Google services, and reveals why the old advice of “just follow the rules” no longer protects you in an era where algorithmic interpretation of vague policies determines your digital survival.

Why Did My YouTube Channel Get Banned? The Real Reasons Behind Mass Terminations

YouTube channel terminations reached epidemic proportions in 2025, with creators reporting “warfare” on their channels as AI detection systems flag content that would have passed human review just months earlier. Matt Par, who has coached over 11,000 YouTubers throughout his decade on the platform, describes current enforcement as unlike anything he’s witnessed, with channels losing years of work and hundreds of thousands of dollars in potential revenue overnight. Understanding YouTube’s detection mechanisms requires examining both what gets flagged and why the appeal process fails to restore legitimate channels.

How YouTube Identifies AI-made Videos

YouTube’s detection architecture operates through multiple layered systems analyzing content, metadata, upload patterns, and channel history simultaneously to build “confidence scores” determining whether human review occurs or immediate termination proceeds. The voice fingerprinting system represents the most sophisticated detection method, analyzing audio spectral patterns, speech consistency, pronunciation uniformity, and phonetic signatures to identify AI-generated voices even when creators modify pitch, change speed, or add background noise to mask synthetic origins. The system maintains databases of common AI voice generators, including ElevenLabs, Murf, Synthesia, and others, enabling instant recognition through voice signature matching.

Visual similarity algorithms scan uploaded videos frame-by-frame, comparing against channel history and broader YouTube content to calculate similarity percentages, flagging repetitive or templated content. A channel showing 47% thumbnail similarity across uploads triggers automatic review for template overuse rather than authentic brand consistency, while videos sharing identical or near-identical visual elements beyond certain thresholds get flagged as mass-produced content lacking originality. The system analyzes keyframe compositions, color schemes, font selections, and layout patterns, determining whether variation represents genuine creative evolution or automated template iteration.
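As a rough illustration of how a similarity percentage across thumbnails could be computed, here is a minimal sketch using a simple average hash on tiny grayscale grids. Everything in it is an assumption for illustration: the function names are hypothetical, YouTube has not published its method, and how the 47% figure above would map onto any particular metric is unknown.

```python
from itertools import combinations


def average_hash(pixels):
    """Return a tuple of bits: 1 where a pixel is brighter than the image mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    return tuple(1 if p > mean else 0 for p in flat)


def hash_similarity(a, b):
    """Fraction of positions where two equal-length hashes agree."""
    matches = sum(1 for x, y in zip(a, b) if x == y)
    return matches / len(a)


def mean_pairwise_similarity(thumbnails):
    """Average similarity over every pair of thumbnails (needs at least two)."""
    hashes = [average_hash(t) for t in thumbnails]
    pairs = list(combinations(hashes, 2))
    return sum(hash_similarity(a, b) for a, b in pairs) / len(pairs)


# Hypothetical 2x2 grayscale thumbnails (0 = black, 255 = white).
template = [[0, 255], [0, 255]]
inverted = [[255, 0], [255, 0]]
score = mean_pairwise_similarity([template, template, inverted])
```

A creator could run something like this over their own thumbnails to see how templated they look, keeping in mind that a bit-level hash is only a stand-in for whatever visual features a production system compares.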

Script analysis through natural language processing examines text uniqueness across videos, identifying channels recycling content structures, repeating information patterns, or generating scripts through automated tools without significant human modification. YouTube’s algorithms detect overly uniform language patterns, predictable sentence structures, and generic vocabulary choices characteristic of AI-generated text, particularly when a comparison of multiple videos from the same channel reveals template-based production rather than original research and writing.

Upload Pattern Analysis

YouTube monitors upload frequency and timing patterns, identifying channels operating beyond human production capacity without proportional engagement to justify rapid content generation. Uploading three or more videos daily for extended periods, particularly month-long stretches, triggers algorithmic scrutiny assessing whether production scale matches engagement metrics, audience growth, and content quality indicators. The system recognizes that human-produced content with genuine value typically requires research, scripting, filming or editing, quality review, and promotional effort incompatible with daily multi-video uploads unless substantial teams support production.
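To make the "three or more videos daily for a month" pattern concrete, the following minimal sketch finds the longest run of consecutive days on which a channel uploaded at least three videos. The function name and the idea of checking one's own upload log this way are assumptions for illustration, not a description of YouTube's actual system.

```python
from collections import Counter
from datetime import date, timedelta


def longest_heavy_streak(upload_dates, per_day=3):
    """Longest run of consecutive days with at least `per_day` uploads."""
    counts = Counter(upload_dates)
    heavy_days = sorted(d for d, n in counts.items() if n >= per_day)
    longest = current = 0
    previous = None
    for day in heavy_days:
        if previous is not None and day - previous == timedelta(days=1):
            current += 1  # streak continues from the previous heavy day
        else:
            current = 1  # gap found, so a new streak starts here
        longest = max(longest, current)
        previous = day
    return longest


# Hypothetical upload log: three videos a day for 30 days.
start = date(2025, 1, 1)
log = [start + timedelta(days=i) for i in range(30) for _ in range(3)]
streak = longest_heavy_streak(log)
```

A streak approaching a month at this volume is the kind of cadence the article describes as drawing scrutiny, especially when engagement does not keep pace.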

Batch upload patterns, where creators upload dozens of videos in single sessions and then go long periods without uploading, signal automated content generation to YouTube’s detection systems. The algorithm flags accounts showing irregular upload cadences inconsistent with genuine creator behavior, particularly when combined with low engagement rates that suggest audience disinterest in rapidly produced content. Even channels passing individual video review face account-level termination when overall patterns indicate mass production violating YouTube’s authenticity standards.

How Titles, Descriptions, and Thumbnails Trigger Bans

Deceptive metadata represents one of YouTube’s explicitly prohibited violations with zero-tolerance enforcement, yet creators frequently face terminations for metadata issues they never realized constituted policy violations. Clickbait titles promising specific outcomes not delivered in video content, thumbnails showing celebrities, public figures, or events in contexts that never occurred, and descriptions making claims unsupported by actual video content all trigger immediate flags under YouTube’s spam and deceptive practices policies.

The P Diddy trial misinformation campaign exemplifies metadata-driven terminations where 26 channels created 900 videos accumulating 70 million views through fabricated celebrity quotes, fake witness testimonies, and thumbnails showing Jay-Z, Usher, Brad Pitt, and Oprah in courtroom contexts that never existed. YouTube terminated 16 channels and demonetized others not merely for misinformation but for systematic metadata deception, presenting fabricated content as legitimate news coverage through carefully crafted titles and thumbnails designed to mislead viewers.

Why Was My Gmail Account Suspended?

Gmail account suspensions increasingly stem from YouTube channel terminations or Google Ads policy violations through cross-platform account linking, where single service violations trigger comprehensive account freezes affecting all Google services simultaneously. This domino effect destroys not just the specific service where the violation occurred but also emails, documents, photos, calendars, and years of irreplaceable personal data across the entire Google ecosystem.

The Inactivity Deletion Policy: Two Years to Permanent Account Loss

Google’s inactive account policy, announced in 2023 and aggressively enforced throughout 2025, specifies that personal Google accounts unused for two consecutive years face permanent deletion, including all associated Gmail messages, Google Photos content, Drive files, and YouTube activity. Warning emails go to both the inactive account and its recovery email address six months before scheduled deletion, with final notices arriving as accounts approach termination dates.

The policy targets a security vulnerability: according to Google, abandoned accounts are at least 10 times less likely than active accounts to have two-step verification set up, which makes them easier targets for compromise. However, enforcement creates hardship for users maintaining secondary accounts for specific purposes, test accounts for development work, or accounts belonging to deceased relatives whose families wish to preserve digital legacies. Email researcher Al Iverson documented receiving deletion warnings for test accounts used for seed list monitoring, with Google providing six-month recovery windows before permanent elimination on specified dates like October 15, 2025.

“Active use” that prevents deletion encompasses signing into the account, reading or sending emails, watching YouTube videos, using Google Search while logged in, accessing Google Drive or Google Photos, or engaging with any Google service in a way that demonstrates human account activity. Business and educational accounts remain exempt from the inactivity policy, which affects only personal consumer accounts.
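The two-year window described above reduces to simple date arithmetic. The sketch below estimates the earliest date an account could become eligible for deletion from its last recorded activity; the function names are hypothetical, and Google's actual scheduling and notice timing may differ from this simplified model.

```python
from datetime import date

INACTIVITY_YEARS = 2  # two consecutive years, per the policy described above


def earliest_deletion_date(last_activity):
    """Date on which the inactivity window would elapse."""
    target_year = last_activity.year + INACTIVITY_YEARS
    try:
        return last_activity.replace(year=target_year)
    except ValueError:
        # Feb 29 has no counterpart in a non-leap year; fall back to Feb 28.
        return last_activity.replace(year=target_year, day=28)


def days_remaining(last_activity, today):
    """Days left before the window elapses (negative if already past)."""
    return (earliest_deletion_date(last_activity) - today).days


remaining = days_remaining(date(2023, 10, 15), date(2025, 10, 1))
```

Any qualifying activity resets `last_activity`, so a calendar reminder set well before the computed date is enough to keep a secondary account alive.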

The Geographic and Behavioral Red Flags

Gmail suspends accounts demonstrating patterns that Google’s systems interpret as unauthorized access attempts, security compromises, or policy violations threatening platform integrity. Login attempts from unusual geographic locations, particularly international regions inconsistent with account history, trigger immediate security blocks requiring identity verification before restoring access. Multiple failed login attempts, which suggest brute-force hacking efforts, lock accounts pending ownership confirmation through recovery email verification, phone number authentication, or security question responses.

Sending patterns indicating spam or mass email campaigns raise automatic flags when accounts suddenly begin sending substantially more messages than historical baselines, particularly when recipients mark messages as spam or Gmail’s algorithms detect commercial content, unsolicited promotions, or malware distribution. New accounts sending large email volumes immediately after creation face heightened scrutiny, as spammers frequently create disposable Gmail accounts for bulk message campaigns before inevitable suspension.
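A sudden departure from a historical sending baseline can be illustrated with a basic standard-deviation test. This is a minimal sketch under stated assumptions: the function name, the three-sigma cutoff, and the use of daily message counts are all hypothetical choices, not Gmail's documented logic.

```python
from statistics import mean, pstdev


def volume_spike(history, today_count, sigmas=3.0):
    """True if today's send count sits far above the historical baseline."""
    baseline = mean(history)
    spread = pstdev(history)
    if spread == 0:
        # A perfectly flat history: any increase counts as a departure.
        return today_count > baseline
    return (today_count - baseline) / spread > sigmas


# Hypothetical daily send counts for a personal account.
usual_days = [10, 12, 11, 9, 10, 8]
flagged = volume_spike(usual_days, 500)
```

The practical takeaway matches the paragraph above: ramping volume gradually keeps each day close to the recent baseline, while a jump from a dozen messages to hundreds stands out immediately.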

Age verification failures represent another suspension trigger where Google determines account holders fall below minimum age requirements, typically 13 years in most jurisdictions, leading to immediate account locks until age verification through parental consent or identification documentation confirms compliance. Accounts associated with prohibited activities, including harassment, threats, illegal content distribution, or terms of service violations, face permanent suspension without recovery options.

How YouTube Bans Kill Gmail Access

YouTube creator Enderman experienced cross-service contamination when his 350,000-subscriber channel, built over nine years, faced termination after YouTube’s algorithm incorrectly linked his account to “Momiji plays Honkai: Star Rail Adventures,” a Japanese gaming channel banned for copyright strikes that Enderman insists he had no connection to whatsoever. Multiple other large creators, including Scratchit Gaming and 4096, reported identical terminations referencing the same Japanese account, revealing systematic algorithmic errors where false account associations trigger mass terminations of innocent channels.

YouTube’s detection systems map behavioral patterns, device fingerprints, IP addresses, payment information, and recovery emails to identify users creating alternate accounts to circumvent previous bans. However, the algorithm’s “high-confidence” decisions proceed to termination without human review, causing irreversible damage when false positives occur. Once YouTube terminates a channel, associated Gmail accounts face increased scrutiny for potential policy violations, while Google Ads accounts linked to the same user may freeze regardless of advertising compliance.

The July 2025 Policy Update: What “Inauthentic Content” Really Means

YouTube’s July 15, 2025, partner program guidelines introduced explicit language targeting “inauthentic content,” defined as material lacking significant originality and authenticity. YouTube’s head of editorial, Rene Ritchie, downplayed the update as merely clarifying longstanding policies identifying “mass-produced or very repetitive” content not providing value to viewers. However, the practical effect created an aggressive enforcement surge where channels producing AI-assisted content without substantial human creativity, originality, and value additions faced demonetization or termination.

The update mandates that content must be “significantly original and authentic” to remain eligible for monetization, with “significant” representing the critical qualifier determining enforcement. Content solely generated through AI tools without meaningful human input, creative transformation, unique perspectives, or genuine value additions fails this standard regardless of technical quality. The policy targets creators using AI to mass-produce content at scale, prioritizing quantity over quality, and the risk is greatest for those in sensitive niches, including news, politics, true crime, celebrity gossip, and making money online, where misinformation risks and deceptive practices concentrate.

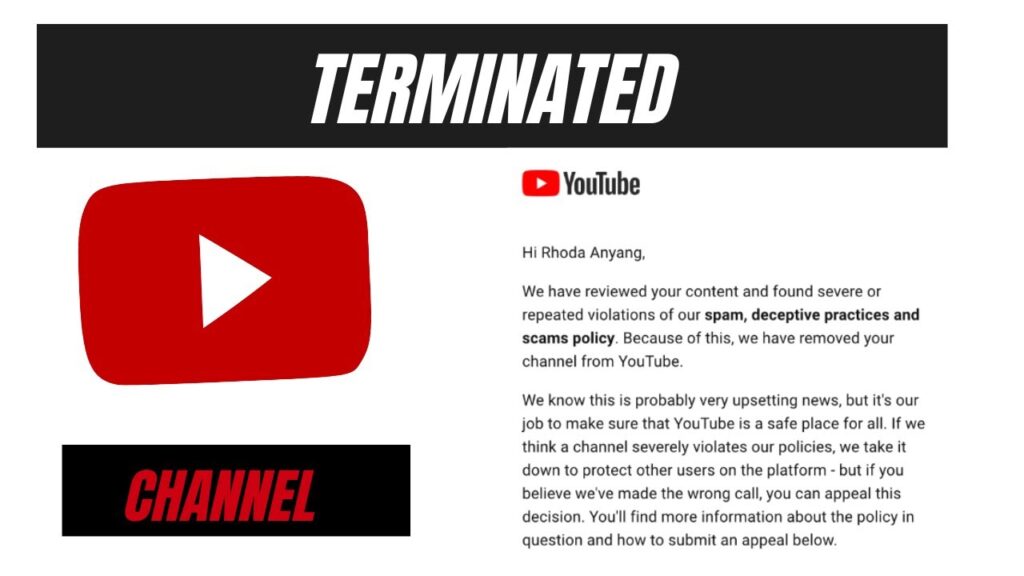

Why Did YouTube Terminate My Channel Without Warning? The Three-Strike Bypass

Traditional YouTube enforcement followed a three-strike warning system in which community guideline violations generated strikes, with channels facing termination only after accumulating three strikes within a 90-day period, providing opportunities for course correction. However, Google considers certain violations sufficiently egregious to warrant immediate termination without prior warnings, strikes, or appeals, a policy that creators often don’t realize exists until experiencing sudden channel deletion.
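The traditional rule of three strikes inside 90 days amounts to a sliding-window check, sketched below. The function name is hypothetical, and whether the boundary day itself counts is an assumption; the sketch treats a strike exactly 90 days after the first as falling outside the window.

```python
from datetime import date, timedelta


def reaches_strike_limit(strike_dates, limit=3, window_days=90):
    """True if any `limit` strikes fall inside one `window_days` span."""
    ordered = sorted(strike_dates)
    window = timedelta(days=window_days)
    for i in range(len(ordered) - limit + 1):
        # Compare the first and last strike of each consecutive group.
        if ordered[i + limit - 1] - ordered[i] < window:
            return True
    return False


# Hypothetical strike history: three strikes within two months.
strikes = [date(2025, 1, 1), date(2025, 2, 1), date(2025, 3, 1)]
at_limit = reaches_strike_limit(strikes)
```

The egregious-violation path described above skips this check entirely, which is why a channel with zero strikes can still disappear overnight.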

What Gets Instant Termination

YouTube classifies spam, deceptive practices, and scams as egregious violations when they pose “serious risk of egregious real-world harm,” bypassing standard warning procedures and proceeding directly to channel termination. The P Diddy misinformation campaign exemplifies this enforcement: channels created fabricated testimonials, false celebrity involvement, and misleading trial coverage that could influence public opinion about ongoing legal proceedings, triggering immediate mass terminations without warning strikes.

The True Crime Case Files channel termination demonstrated similar instant enforcement. Creator “Paul” admitted generating 150 completely fabricated murder videos using ChatGPT scripts and AI-generated images, with one video describing a non-existent Colorado crime attracting 2 million views before journalists investigating public records exposed the hoax. YouTube terminated the channel for multiple guideline violations without warning strikes due to systematic deception posing reputational risks to the platform and actual harm through terrorizing communities with fake crime reports.

Make money online channels face elevated termination risk when titles, thumbnails, or content promise specific income amounts, positioning videos as guaranteed money-making opportunities rather than educational content about potential business strategies. Matt Par received two strikes on his channel, placing him one strike from total termination, despite creating legitimate educational content, because specific videos used titles and thumbnails that YouTube interpreted as promising income rather than demonstrating possibilities. The Federal Trade Commission and similar regulatory agencies pressure platforms to eliminate content making unsubstantiated earnings claims, leading Google to implement aggressive enforcement to protect itself from potential legal liability.

Why Responses Come in Minutes, Not Days

Rome Row experienced the appeal process failure firsthand when his celebrity news channel faced termination without warning, prompting immediate appeal submission explaining the content context and requesting human review. YouTube’s response arrived within minutes, impossibly fast for genuine human review of the entire channel content library, appeal text, and supporting evidence. The generic automated response claimed the channel was “probably hacked” with new changes violating community guidelines, ignoring Rome Row’s detailed explanations and context.

This rapid-fire rejection reveals that YouTube’s appeal system now operates primarily through automated AI responses rather than human reviewers, with algorithms analyzing appeals against termination records and deciding instantly whether restoration is consistent with policy. The system creates a catch-22 where algorithmic enforcement generates false positives, but algorithmic appeal review validates original enforcement decisions, eliminating meaningful human oversight and error correction.

Enderman described his termination as the “end of an era” unless “divine human intervention” could reverse AI-driven mistakes, acknowledging that reaching actual human reviewers at YouTube requires exceptional circumstances beyond standard appeal processes. Creators across industries report nearly identical experiences: sudden terminations, rapid appeal rejections, and inability to access human reviewers who might understand context, evaluate fair use arguments, or recognize algorithmic errors.

What Triggers AdSense Account Suspension?

Google AdSense account suspensions interconnect with YouTube monetization, Google Ads compliance, and overall account standing, creating an enforcement web where violations in one service cascade across the entire Google ecosystem. StubGroup’s analysis reveals 5-10% of suspended Google Ads accounts face flags for multiple policy violations, with circumventing systems (attempting to bypass Google restrictions) and unacceptable business practices (conducting prohibited activities) representing the most common egregious violations Google considers worthy of immediate suspension without prior warning.

How YouTube Violations Kill AdSense Revenue

YouTube’s July 2025 inauthentic content policy update directly impacts AdSense eligibility, as channels generating primarily AI-produced content without substantial human value additions face demonetization, ending AdSense revenue streams regardless of view counts or subscriber bases. The policy states content must be “significantly original and authentic” to maintain monetization eligibility, with enforcement targeting channels producing mass quantities of repetitive, templated, or AI-generated content viewers consider spam.

Channels built entirely on AI voiceovers with stock footage, Reddit story compilations, meditation videos without guided voiceovers, or celebrity gossip using AI-generated thumbnails and fabricated quotes face systematic demonetization as YouTube’s detection identifies patterns indicating automated production lacking genuine human creativity. Even channels with substantial subscriber bases and view counts lose monetization when algorithmic analysis determines content fails authenticity standards, regardless of audience engagement metrics.

The How to AI channel termination exemplifies this enforcement, where creator Leo built a legitimate educational channel generating over $1 million annually, teaching AI strategies to hundreds of thousands of viewers, yet faced termination when YouTube flagged specific videos for policy violations Leo claims resulted from overly aggressive enforcement rather than actual misconduct. The case demonstrates that even successful, value-adding channels face existential risk when algorithms interpret content as violating evolving authenticity standards.

Checking the Box That Saves Your Channel

YouTube implemented mandatory disclosure requirements starting May 2025, requiring creators to indicate when content includes realistic AI-generated or altered elements appearing authentic but not reflecting actual events. The disclosure process operates through a simple checkbox in YouTube Studio during video upload, with creators selecting the “altered content” option when videos contain AI-generated realistic elements, including:

- Real persons appearing to say or do things they didn’t actually say or do

- Altered footage of real events or places that makes them appear different from reality

- Generated realistic-looking scenes or events that didn’t actually occur

- Synthetic voices created for real people without their participation

The requirement specifically targets content that could mislead viewers about reality, with sensitive topics including elections, public health crises, and ongoing legal matters receiving additional prominent labels directly on video players, alerting viewers to altered content. Creators failing to check disclosure boxes when applicable face one of the biggest termination risks in 2025, as YouTube’s detection systems compare detected realistic AI-generated elements against disclosure settings, flagging undisclosed AI content as deceptive practices worthy of channel strikes or immediate termination.

Matt Par emphasizes this represents an easily avoidable termination cause, yet creators frequently overlook disclosure requirements either through ignorance or intentional evasion, hoping to avoid labeling that might reduce view counts. The irony: proper disclosure typically doesn’t harm video performance, while a lack of disclosure when AI elements exist creates catastrophic channel termination risk.

Can I Appeal Google Account Termination?

Google account termination appeals face systematic barriers, making meaningful human review functionally impossible for the vast majority of users experiencing automated enforcement errors. The appeal architecture reflects Google’s scale challenges, where millions of accounts, billions of content pieces, and trillions of interactions occur daily, making individual human review economically infeasible despite the catastrophic personal impact account terminations create.

The Automated Appeal Response Loop

Documented cases consistently show appeal responses arriving within minutes of submission, revealing automated systems processing appeals rather than human reviewers examining evidence, considering context, or evaluating fair use arguments. Rome Row’s rejection within minutes, Enderman’s formulaic responses, and countless other creators reporting identical experiences demonstrate a systematic pattern where algorithms validate original enforcement decisions, creating a circular logic trap: AI flags content, AI reviews appeal, AI confirms AI was correct.

The automated system analyzes appeal text against termination records using natural language processing and pattern matching, determining whether appeals present genuinely new information contradicting original violation findings. However, the threshold for meaningful new information effectively requires proof that the original enforcement was algorithmically erroneous, which is information submitters rarely possess and algorithms resist acknowledging, creating a built-in bias favoring enforcement validation over error correction.

How to Identify Human vs AI Responses

Google’s appeal responses follow predictable templates revealing automated generation through generic language, lack of specific case details beyond violation type, absence of nuanced policy interpretation, and formulaic structure appearing across thousands of rejected appeals. Human-reviewed appeals typically reference specific content elements, cite relevant policy sections with contextual interpretation, acknowledge ambiguities requiring judgment calls, and demonstrate engagement with the submitter’s arguments even when ultimately rejecting appeals.

Automated responses, conversely, use vague language like “violated community guidelines,” “appears to be hacked,” “detected prohibited activity,” or “found inauthentic content” without explaining what specifically triggered flags, which content caused violations, or how submitters might modify behavior to prevent future issues. The lack of actionable feedback, combined with instant rejection timelines, signals automated processing in which appeals serve merely as a policy compliance ritual rather than genuine review mechanisms that protect against false positives.
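The two signals described above, generic wording and near-instant turnaround, can be combined into a rough self-check when reading an appeal response. This is only a heuristic sketch: the phrase list is taken from the examples in this section, while the function name and the ten-minute cutoff are hypothetical choices rather than anything Google has documented.

```python
GENERIC_PHRASES = (
    "violated community guidelines",
    "appears to be hacked",
    "detected prohibited activity",
    "found inauthentic content",
)


def looks_automated(response_text, minutes_to_reply, fast_minutes=10):
    """Heuristic: generic wording plus a very fast reply suggests automation."""
    text = response_text.lower()
    generic = any(phrase in text for phrase in GENERIC_PHRASES)
    fast = minutes_to_reply <= fast_minutes
    return generic and fast


verdict = looks_automated("Your channel violated Community Guidelines.", 3)
```

A positive result proves nothing on its own, but it is a reasonable cue to preserve the response, note the timestamps, and pursue escalation channels rather than resubmitting the same appeal.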

The Human Review Exception: Google AI Account Purge

Certain circumstances trigger actual human review, including:

- Media coverage creating public pressure to examine the case

- Legal threats from well-resourced parties able to pursue litigation

- Obvious platform errors affecting high-profile verified accounts

- Systematic bugs generating mass false positives that require fixes

- Internal employee advocacy when staff personally know affected users

Enderman’s hope for “divine human intervention” acknowledges that reaching actual human reviewers requires exceptional circumstances beyond normal users’ access. Viral social media campaigns, journalist investigations like Elizabeth Hernandez’s exposure of the True Crime Case Files fabrications, or technology influencer coverage of termination waves occasionally generate enough pressure to force a platform response.

However, the overwhelming majority of legitimate users facing algorithmic enforcement errors never achieve the visibility or advocacy necessary to trigger human review, instead experiencing permanent account loss without a genuine appeal opportunity despite following policies to the best of their knowledge and ability.

How to Avoid YouTube AI Content Ban

Surviving YouTube’s AI enforcement purge requires understanding detection systems’ architectural limitations and optimizing content production around verifiable human value additions that algorithms can’t easily replicate or dismiss. The old advice of “follow the rules” proves insufficient when vague policies like “provide significant originality and authenticity” undergo algorithmic interpretation without consistent standards across similar content.

Adding Sufficient Human Input

YouTube’s detection thresholds suggest that content requires at least 30-40% modification from AI-generated bases or templates to demonstrate substantial human creative input. This manifests through:

Script Transformation: AI-generated scripts require comprehensive editing, adding unique perspectives, personal examples, original research, conversational voice, humor, or personality, and structural reorganization rather than surface-level word substitutions.

Visual Customization: Templates and stock footage demand significant modification through custom graphics, original footage additions, creative transitions, color grading adjustments, and compositional reimagining rather than merely swapping text or single images.

Voice Personalization: AI voices benefit from mixing with human narration sections, adding personality through inflection and pacing variations impossible for current AI, including genuine mistakes and spontaneous asides, or switching to actual human narration for full authenticity.

The transformation rule recognizes AI as a legitimate production tool when combined with meaningful human creativity, distinguishing between using AI to automate tedious tasks while dedicating human energy to unique insights versus mass-producing templated content with minimal effort.
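One way to put a number on how far a final script has moved from its AI draft is a word-level diff, sketched here with Python's standard `difflib`. The function name is hypothetical, and this word-overlap measure is only a stand-in: nothing in YouTube's published policy defines how a 30-40% modification would actually be measured.

```python
from difflib import SequenceMatcher


def modified_share(draft, final):
    """Approximate fraction of the script that changed, by word-level diff."""
    draft_words = draft.split()
    final_words = final.split()
    matcher = SequenceMatcher(None, draft_words, final_words, autojunk=False)
    # ratio() is 1.0 for identical sequences and 0.0 for no overlap at all.
    return 1.0 - matcher.ratio()


# Hypothetical four-word draft where half the words were rewritten.
share = modified_share("a b c d", "a b x y")
```

A score near zero means the edit was cosmetic. Word substitutions alone raise the score without adding the perspective, research, or structure that the transformation rule is really about, so the number is a floor to clear, not a goal in itself.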

Making Content Worth Watching

YouTube’s enforcement fundamentally targets content that viewers consider spam: repetitive, low-effort, and providing no meaningful value that would justify its existence. The platform incentivizes creators to ask before each upload: “Does this video provide something valuable viewers can’t get elsewhere? Does it educate, entertain, inspire, or inform in ways justifying 10 minutes of someone’s limited attention?”

Content surviving algorithmic review typically demonstrates:

Original Research: Information synthesis from multiple sources rather than regurgitating single articles

Unique Perspectives: Personal experiences, specialized expertise, or novel angles on common topics

Genuine Commentary: Analysis, critique, or insight beyond surface-level description

Entertainment Value: Humor, storytelling, personality, or production quality justifying viewer engagement

Practical Application: Actionable information viewers can implement rather than generic advice

Channels like Ali Abdaal’s demonstrate successful AI integration, using automation for research, script drafts, and editing efficiency while dedicating human creativity to the storytelling, personality development, and unique perspectives that distinguish the content from competitors. This strategic AI use passes YouTube’s authenticity tests by demonstrating that AI serves as a tool amplifying human creativity rather than replacing it.

Where Extra Caution is Required

Certain niches attract heightened enforcement scrutiny due to misinformation risks, legal implications, or spam concentration, making them functionally banned despite no explicit niche prohibitions:

True Crime: Fabricated crime coverage poses obvious risks post-True Crime Case Files, requiring meticulous source verification and clear fiction labeling when creating narrative content.

News and Politics: Sensitive topics, including elections, trials, and public health, demand absolute accuracy with immediate termination risk for AI-generated misinformation.

Make Money Online: Earnings claims in titles or thumbnails create termination vulnerability regardless of legitimate content, requiring careful framing emphasizing education over guarantees.

Celebrity Gossip: AI-generated celebrity likenesses, fabricated quotes, or misleading thumbnails trigger deceptive metadata enforcement.

AI Slop Channels: Reddit story compilations, revenge narratives, relaxation videos without guided narration, and compilation content face systematic targeting.

Creators in these niches need exceptional diligence to survive algorithmic scrutiny: providing genuine value, verifying accuracy, disclosing AI elements properly, and demonstrating obvious human involvement.

Conclusion: Surviving the Algorithm or Building Outside Google’s Walls

Google’s AI account purge across YouTube, Gmail, AdSense, and interconnected services reflects a platform evolution that prioritizes automated enforcement at scale over individual user experiences, creating a digital ecosystem where algorithmic interpretation of vague policies determines who keeps decade-long accounts and who loses irreplaceable data overnight. The enforcement architecture of automated detection without consistent human review, combined with automated appeals validating original AI decisions, creates a self-reinforcing system resistant to error correction despite obvious false positives affecting legitimate users.

Survival requires understanding the technical detection mechanisms, including voice fingerprinting, visual similarity analysis, metadata evaluation, and pattern recognition, then optimizing content production to demonstrate sufficient human value additions that algorithms can’t easily dismiss. The 30-40% transformation rule, value addition requirement, disclosure compliance, and niche caution collectively reduce termination risk, though no strategy provides absolute protection when algorithmic interpretation of subjective standards like “significant originality” determines outcomes.

However, the broader strategic question extends beyond surviving Google’s purges: should creators, businesses, and users continue building digital lives entirely within ecosystems where a single company controls access through opaque automated systems lacking meaningful oversight or appeal processes? The growing creator exodus to platforms including TikTok, Instagram Reels, and emerging alternatives reflects recognition that diversification across multiple platforms reduces catastrophic risk where single termination destroys years of work.

For users facing immediate termination threats, the priority hierarchy is: back up all Google account data immediately using Google Takeout before access disappears; submit appeals, knowing that automated rejection is likely but that they create a paper trail for potential human review if the situation escalates; document everything, including screenshots of content, termination notices, and communications, for potential legal action or public advocacy campaigns; and establish a presence on alternative platforms to protect against total platform dependency.

Whether Google’s AI enforcement purges represent a necessary evolution protecting platform integrity against spam and misinformation, or overreach destroying legitimate creators caught in overly aggressive automated systems, likely depends on which side of the termination notice you experience. What remains undeniable: the era of assuming that policy compliance and good-faith effort will protect your Google accounts has ended, replaced by the reality that survival increasingly depends on understanding algorithmic enforcement mechanisms and optimizing for machine evaluation rather than human judgment.

Disclaimer: iMali delivers independent analysis of digital platform economics, content monetization systems, and technology policy developments affecting creators, businesses, and digital services. Our coverage examines platform enforcement mechanisms, algorithmic content moderation, digital account security, and monetization policy changes shaping modern digital economy participation. From YouTube monetization to payment processing compliance, iMali provides independent research and insights enabling informed navigation of rapidly evolving digital platform ecosystems.